AI Insights for Independent Schools

July 2024

A monthly newsletter brought to you by Leon Furze,

in collaboration with Independent Schools Victoria.

9 min read

Welcome to the inaugural issue of 'AI Insights for Independent Schools', a monthly newsletter brought to you by Leon Furze in collaboration with Independent Schools Victoria.

As an international consultant, author and speaker with over 15 years of experience in education, I’m excited to share my insights on the rapidly evolving world of Generative AI in K-12 education. I am currently pursuing my PhD on the implications of GenAI for writing instruction and education, and I have worked extensively with Independent schools in Victoria and across Australia throughout 2023 and 2024.

The purpose of this monthly newsletter is to keep you informed about the latest developments in GenAI, provide practical resources for implementation in your classrooms, and offer guidance on the ethical considerations that come with this transformative technology. Whether you’re a technology early adopter or just beginning to explore AI in education, this newsletter aims to provide valuable insights for all educators in Independent schools.

Each month, we’ll explore new tools, share effective prompts and methods, discuss recent research and highlight professional development opportunities. We’ll also address the challenges and opportunities that GenAI presents, always with a focus on enhancing teaching and learning in our schools.

In this issue

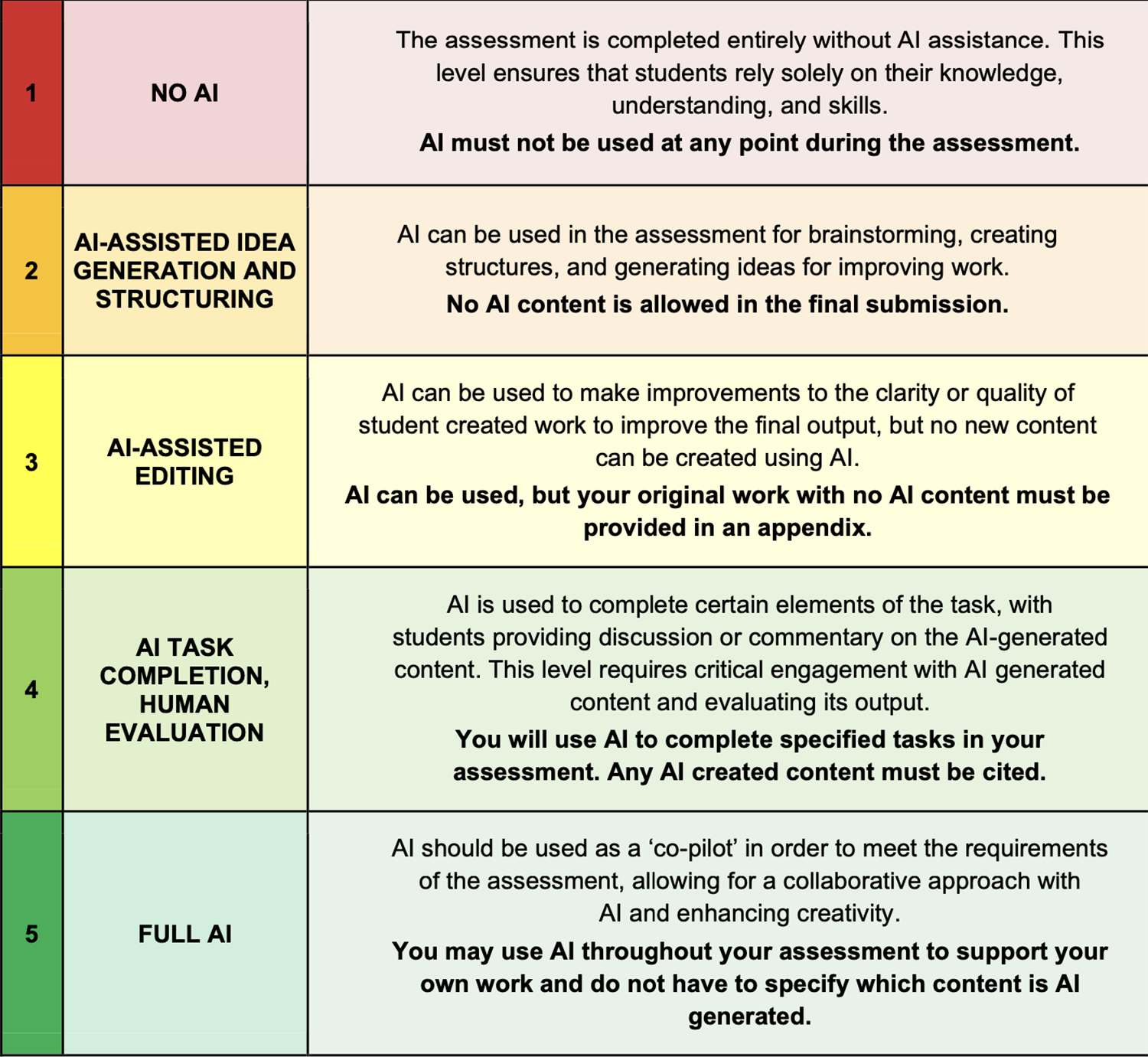

Spotlight: The AI Assessment Scale (AIAS)

This month, we’re putting the spotlight on a tool that’s gaining traction in both K-12 and tertiary education: the AI Assessment Scale.

Developed by Dr. Mike Perkins, Dr. Jasper Roe, Associate Professor Jason MacVaugh, and Leon Furze, the AI Assessment Scale (AIAS) offers a structured approach to incorporating AI into assessment practices. This scale provides a framework for educators to thoughtfully integrate AI into their assessment strategies, moving beyond the binary of ‘use AI’ or ‘don’t use AI’.

The AIAS consists of five levels:

- No AI: Traditional assessment without AI involvement

- AI Assisted Brainstorming: Using AI for idea generation

- AI Editing: AI for refining and polishing work

- AI + Human Evaluation: Working alongside AI throughout the process

- Full AI: AI generates the entire response, or significant parts of the whole

By using this scale, educators can design assessments that gradually introduce AI, allowing students to develop critical AI literacy skills while maintaining academic integrity.

Implementing the AIAS in your school:

- Start with faculty discussions about the role of AI in assessment

- Redesign assignments to align with different levels of the scale

- Clearly communicate expectations to students for each assessment

- Provide guidance on ethical AI use at each level

- Regularly review and adjust your approach based on outcomes

Case Study: Beaconhills College

Beaconhills College has successfully implemented the AIAS as part of its recently published GenAI Guidelines. The College has engaged students through focus groups and run staff professional development focused on the AI Assessment Scale and the need to rethink assessment in light of Generative AI.

Beaconhills College also invited parents to an online evening information session where Leon Furze discussed Generative AI, including how the AI Assessment Scale will be used to support academic integrity.

Try it yourself: The AIAS GPT

Perkins, Furze, Roe and MacVaugh have created a Custom GPT built on OpenAI’s GPT-4o that allows users to interact with the AIAS. The custom chatbot contains all of the academic publications related to the AIAS, as well as additional resources, examples of the AIAS in action across different subject areas, and support materials.

You can ask the AIAS GPT questions about the Scale, or ask it to develop assessment tasks aligned to any of the five levels. You can also upload documents and PDFs such as existing assessment tasks, and have the AIAS GPT make recommendations based on the Scale and the research.

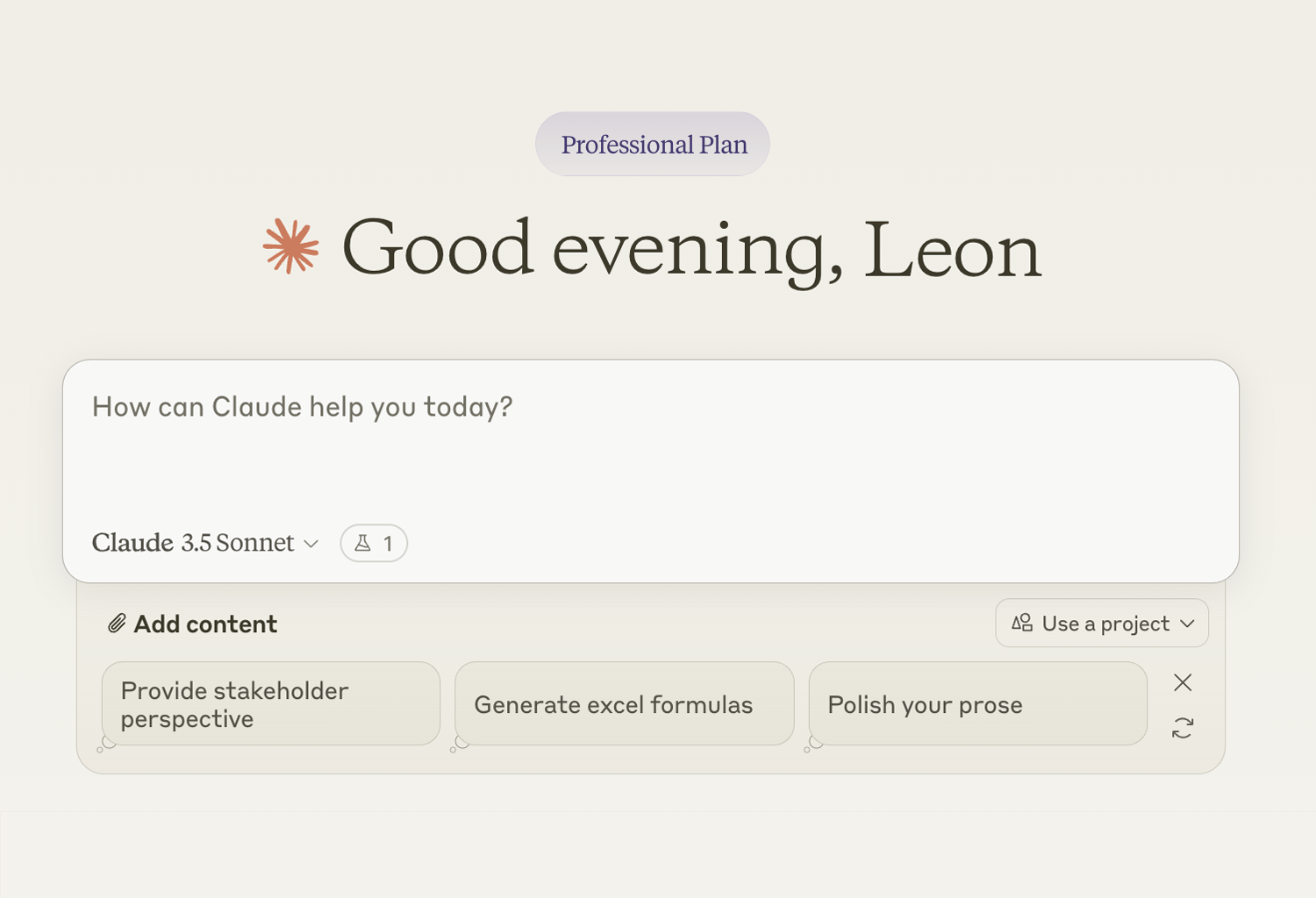

Application of the Month: Claude 3.5 Sonnet

This month, we’re also spotlighting a tool I’ve been particularly excited about: Anthropic’s Claude 3.5 Sonnet. As an AI assistant, Claude 3.5 Sonnet offers some impressive capabilities that can be especially useful in educational settings.

Key features

1. Advanced language understanding

Claude 3.5 Sonnet excels at comprehending complex instructions and context, making it a powerful tool for research and writing assistance. On Anthropic’s published benchmarks, it scores higher than GPT-4o on several tests of reasoning and language understanding.

2. Multimodal capabilities

Although it can’t generate images, it can analyse images and documents, offering potential for diverse educational applications across subjects.

3. Ethical considerations

Claude is designed with a strong emphasis on ethical behaviour, making it a good choice for educational environments where safety and appropriate content are paramount.

4. New Artifacts feature (free)

This allows Claude to create and display working code and applications in a window alongside the chat, meaning it can generate simple functioning websites and apps.

5. New Projects feature (paid)

This paid feature enables users to save conversation history, add files, and set custom instructions, making it easier to pick up where you left off on long-term projects.

Free vs. paid features

- Free: Chats, image analysis and the new Artifacts feature are available in the free version.

- Paid: The Projects feature, and much higher usage limits (the number of messages you can send).

Educational applications

1. Writing support

Use Claude to brainstorm ideas, outline essays, or provide feedback on student writing. In my experience, Claude’s writing is stronger than ChatGPT’s.

2. Research assistant

Claude can help summarise articles, explain complex concepts, and assist in literature reviews by helping to synthesise disparate ideas.

3. Lesson planning

Teachers can use Claude to generate creative lesson ideas or adapt existing plans for different learning needs. The Projects feature, though paid, is excellent for this.

4. STEM problem-solving

Claude can walk students through problem-solving steps in maths and science, explaining concepts along the way. It has excellent mathematical reasoning and coding skills.

5. Language learning

For EAL students, Claude can assist with translations, explanations of idioms, and cultural context. Like the GPT models behind ChatGPT, it is a multilingual large language model (LLM).

Ethical considerations

While Claude 3.5 Sonnet is a powerful tool, it’s crucial to use it responsibly in educational settings. Encourage students to use it as a learning aid rather than a replacement for their own critical thinking. Always verify information provided by AI, and use it in conjunction with human expertise and judgement.

In my own work, I’ve found Claude 3.5 Sonnet particularly useful for generating initial drafts of content, which I then heavily edit and refine. It’s also excellent for brainstorming and exploring different perspectives on complex topics.

Remember, the key to effectively using AI tools like Claude in education is to view them as supplements to, not replacements for, human teaching and learning. In our next issue, we’ll explore another tool that could be useful for both teachers and students.

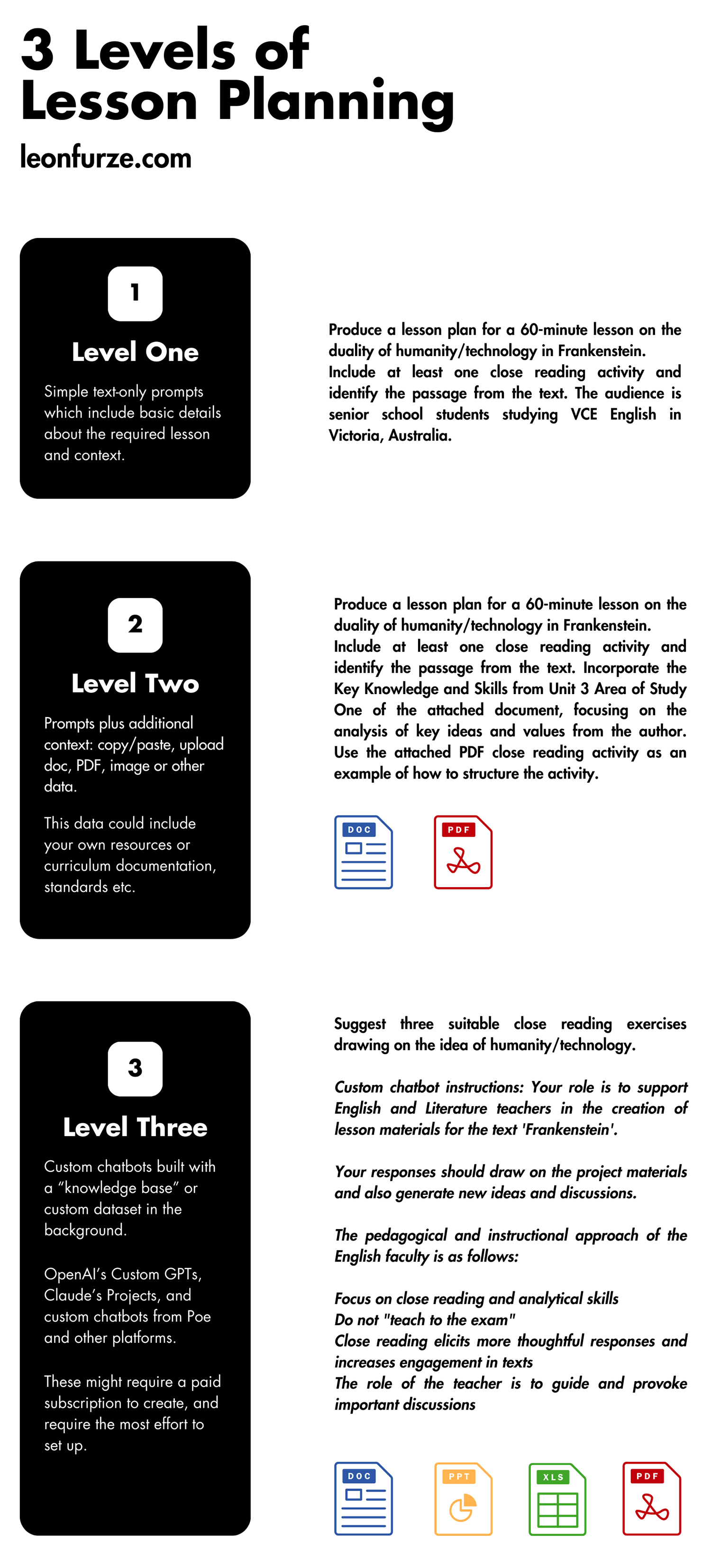

3 levels of prompting

Recently I wrote an article that looked at three levels of prompting for Generative AI, from the most basic to the most complex. As we become more familiar with these systems, I’m noticing many educators moving beyond simple prompts. Sometimes a short, simple prompt in ChatGPT or another chatbot is all you need, but often you will need to add more detail and context to get what you want.

Based on that recent blog post, here are three levels of prompting for lesson planning using Generative AI:

Level 1: Basic Prompts

Use straightforward prompts in simple AI chatbots like ChatGPT. Avoid generic requests and instead provide specific details:

Example prompt: “Create a 60-minute lesson plan for Year 12 VCE English on the duality of humans and technology in Frankenstein. Include a brief introduction, main activity, and conclusion.”

Level 2: Adding Context

Enhance results by providing additional context through file uploads or longer text inputs. You can upload PDFs and Word documents such as unit plans, existing resources to use as examples, or extracts of texts:

Example prompt (with attached curriculum document): “Using the attached VCE Biology study design, create a single 50-minute lesson aligned to these outcomes, focusing on cellular structure.”

Level 3: Custom Chatbots and Projects

For sophisticated results, use tools like Claude Projects or Custom GPTs. This requires paid subscriptions but offers more tailored outputs. I mentioned Claude Projects earlier in this issue, and the paid version of OpenAI’s ChatGPT includes a similar feature with the ability to make Custom GPTs (just like our AIAS example):

Example prompt (in a Claude Project with uploaded resources): “Based on our school’s inquiry-based learning model and the uploaded unit materials, design a series of three 45-minute lessons on climate change for Year 9 Science, incorporating hands-on experiments and group discussions.”

Remember, the goal is to augment your expertise, not replace it. Always review and adapt AI-generated content to ensure it meets your students’ needs and aligns with your teaching philosophy.

Research roundup: AI detection tools

A recent study by Perkins et al. (2024) investigated the effectiveness of AI text detectors in identifying machine-generated content, particularly when ‘adversarial techniques’ are applied to evade detection. The research, which involved testing 805 samples across six major Generative AI (GenAI) text detectors, revealed significant limitations in the accuracy and reliability of these tools.

Key findings

- Low baseline accuracy: Even without manipulation, AI detectors demonstrated a relatively low average accuracy rate of 39.5% in identifying AI-generated content (Perkins et al., 2024).

- Reduced accuracy with adversarial techniques: When adversarial techniques were applied to AI-generated text, the average accuracy of detectors dropped further to 22.14%, a fall of 17.4 percentage points (Perkins et al., 2024).

- Variation in detector performance: The study found considerable differences in the performance of various AI detectors, with accuracy rates ranging from 4.5% to 58.7% when faced with manipulated content (Perkins et al., 2024).

- Effectiveness of evasion techniques: Some adversarial techniques, such as adding spelling errors and increasing textual “burstiness,” proved highly effective in evading detection (Perkins et al., 2024).
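It is worth being precise about what the 17.4 figure means. The worked arithmetic below uses only the two averages reported above and assumes both are measured on the same scale: the 17.4 is an absolute drop in percentage points, which corresponds to a much larger relative fall in accuracy.

```latex
\begin{aligned}
\text{absolute drop} &= 39.5\% - 22.14\% = 17.36 \text{ percentage points} \approx 17.4 \\
\text{relative drop} &= \frac{39.5 - 22.14}{39.5} = \frac{17.36}{39.5} \approx 0.44 \;(\approx 44\%) \\
\text{remaining accuracy} &= \frac{22.14}{39.5} \approx 0.56 \;(\approx 56\%)
\end{aligned}
```

In other words, on these averages, detector accuracy on manipulated text was only a little over half of its already low accuracy on unaltered AI-generated text.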

Implications for education

The findings of this study raise important concerns about the use of AI detection tools in academic settings. Previous research by Liang et al. (2023), as cited in Perkins et al. (2024), highlighted potential biases against non-native English speakers in AI detection systems. This issue, combined with the low accuracy rates and vulnerability to evasion techniques demonstrated in the current study, suggests that these tools may create barriers to inclusive assessment rather than promote academic integrity.

Furthermore, the study by Weber-Wulff et al. (2023), also referenced in Perkins et al. (2024), corroborates the findings on the susceptibility of AI detectors to various manipulation techniques, including machine translation and paraphrasing.

The results of this study underscore the need for a cautious and critical approach to implementing AI detection tools in education. The potential for false accusations and the ease with which these tools can be manipulated raise serious questions about their reliability in maintaining academic integrity. As Perkins et al. (2024) suggest, while these tools may have a role in supporting student learning when used non-punitively, they cannot be recommended for determining academic integrity violations.

Educators and institutions should consider alternative approaches to assessment that recognise the growing ubiquity of GenAI tools while ensuring fairness and inclusivity. This may involve rethinking traditional assessment methods and developing strategies that integrate AI tools into learning and assessment processes in ethical and transparent ways.

References

Liang, W., Yuksekgonul, M., Mao, Y., Wu, E., & Zou, J. (2023). GPT detectors are biased against non-native English writers. arXiv. https://doi.org/10.48550/arXiv.2304.02819

Perkins, M., Roe, J., Vu, B. H., Postma, D., Hickerson, D., McGaughran, J., & Khuat, H. Q. (2024). GenAI detection tools, adversarial techniques and implications for inclusivity in higher education. arXiv. https://doi.org/10.48550/arXiv.2403.19148

Weber-Wulff, D., Anohina-Naumeca, A., Bjelobaba, S., Foltýnek, T., Guerrero-Dib, J., Popoola, O., Šigut, P., & Waddington, L. (2023). Testing of detection tools for AI-generated text. International Journal for Educational Integrity, 19(1). https://doi.org/10.1007/s40979-023-00146-z

Upcoming professional development

AI Leader Network

Join a network of AI leaders to build connections and learn from and with each other.

Independent Schools Victoria’s Artificial Intelligence (AI) Leader Network is designed to foster collaboration and communication among those with key oversight of AI at their school.

The network serves as a forum for leaders to come together, share insights and work towards enhancing the educational experience for all school communities.

Key takeaways:

- a stronger leadership vision

- fresh, effective perspectives and ideas

- connections with fellow AI leaders

- actionable ideas to implement right away.

The first meeting will be held online on Wednesday 11 September, from 3.45 pm to 4.45 pm. Open to ISV Member Schools only.

Q&A

Every month we’ll be inviting Independent school teachers and leaders to ask questions, which Leon will (try to) answer in the following issue. To send your questions, requests, or feedback, please contact Leon at leonfurze@gmail.com.

Stay tuned for the next edition in ISV’s Latest in Learning newsletter.