AI Insights for Independent schools, September 2024

Deepfakes, the implications for education and more.


Welcome to the September issue of AI Insights for Independent schools, your monthly guide to the rapidly changing world of Generative AI in K-12 education. This issue updates the AI Assessment Scale and discusses one of the most pressing concerns in Generative AI: deepfakes.

In the last issue, I discussed the University of Sydney’s ‘Two Lanes’ approach to assessment and how to approach secure assessment tasks. I also put the spotlight on GPT-4o, OpenAI’s most capable model, and explored the contentious topic of AI grading and assessment.

This month, I’ll focus on image generation with Adobe Firefly and provide some updates to the AI Assessment Scale. I’ll also look at emerging research into the risks of deepfake technologies.

My ‘3 Levels of Prompting’ section will focus on teaching and learning with Adobe Firefly. While you can’t create custom chatbots (Level 3) with Firefly, you can upload files as reference images for style and composition, and it can be a great teaching tool.

As always, the aim is to provide you with practical insights, ethical considerations and actionable strategies for integrating these cutting-edge AI tools into your teaching practice. Whether you’re an AI early adopter or just beginning to explore its potential in education, I’ve designed this newsletter to help you make informed decisions about AI technologies.

In this issue

Spotlight: Updating the AI Assessment Scale

In issue one, I introduced the AI Assessment Scale (AIAS). Over the past 12 months, we have been updating the Scale in response to feedback from the community and rapid developments in the technology. We have just released the updated version, in time for lead author Mike Perkins to present it in Paris at UNESCO’s Digital Learning Week.

Key updates:

The scale has been updated to reflect current AI capabilities and educational needs:

- Level 1: No AI

- Level 2: AI for Planning

- Level 3: AI for Collaboration

- Level 4: Full AI

- Level 5: AI Exploration

- Level 2 now focuses on using AI for planning, initial composition and research. This is particularly relevant for early courses where foundational skills are being established.

- Level 3 emphasises AI for evaluation, feedback and the use of custom-designed course tutors. Students can use AI for writing development but must apply critical thinking to the output.

- Level 4 (previously Level 5) allows unrestricted use of AI, acknowledging the reality that sophisticated AI use is often undetectable.

- Level 5 is exploratory, encouraging students and educators to experiment with cutting-edge AI technologies, including AI agents, advanced multimodal systems and convergences with AR/VR and robotics.

A significant shift in the updated AIAS is the focus on assessment validity over traditional notions of assessment security. This change reflects the understanding that:

- Permitting any AI use effectively permits all AI use, given the sophistication and undetectability of current tools.

- True assessment security is only possible at Level 1 (No AI).

- Assessment validity is achievable across all levels when designed thoughtfully.

This perspective, influenced by recent work from Deakin University’s CRADLE and TEQSA in Australia, acknowledges that the distinctions between AI use levels are somewhat arbitrary given current technology. Instead, the focus should be on designing assessments that validly measure intended learning outcomes, regardless of AI involvement.

The new colour scheme moves away from the red-to-green gradient to avoid traffic light connotations. The new colours are distinct and were chosen for accessibility, and they have been tested with online tools and by individuals with colour blindness and low vision.

A new circular graphic has been introduced alongside the traditional table format. This circular design emphasises that all levels of the AIAS may be equally valid depending on the context, discipline, and assessment type.

These updates encourage educators to:

- Consider AI integration at various stages of the assessment process.

- Focus on designing assessments that validly measure learning outcomes, rather than trying to “AI-proof” assessments.

- Experiment with AI in ways that enhance learning and prepare students for an AI-integrated future.

- Engage with students as partners in exploring innovative AI applications in education.

The revised AIAS provides a more nuanced and flexible framework for integrating AI into assessment practices, reflecting our evolving understanding of AI’s role in education. We encourage educators in both K-12 and higher education to adopt and adapt the AIAS to increase transparency and clarity in AI use for learning and assessment.

Application of the month: Adobe Firefly v3

For a long time, Adobe’s Firefly has been my go-to image generator. If you’ve read my blog at Leonfurze.com, then you’ve seen Firefly images used as blog headers and artwork.

Although Firefly is perhaps not as powerful as competitors like Midjourney, it has some advantages for educators which make it a very interesting application.

First, many K-12 education providers already have an Adobe license. If your school has Adobe CC, then your staff and students (even in K-6) likely already have access to Firefly. I even get access through my Deakin University student account, so it’s likely that many students will continue to use Firefly if they go on to tertiary education.

It’s also incredibly easy to use. Like all Adobe products, Firefly is designed for creators, but unlike software such as Premiere Pro or Photoshop, its learning curve is very shallow. Features such as the style and medium ‘tags’ are a great addition and make it easy to vary your creations with the click of a button (or several).

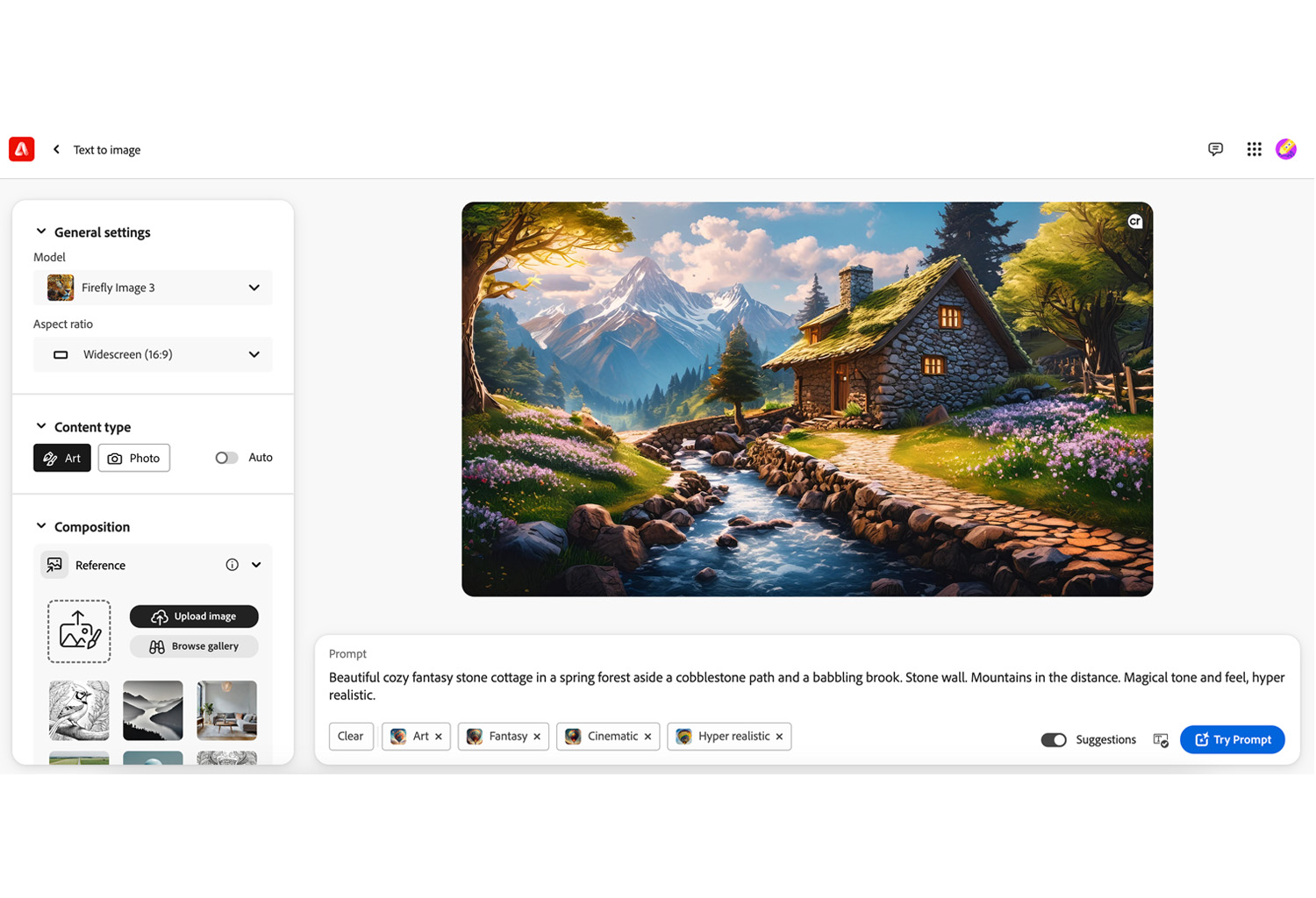

If you want to get more technical, you can upload style and structure references which help guide the model to a particular composition or visual style. And once you’re happy with your image, you can “generate similar” to create variations or hit “add text” which sends the image to Adobe Express for further editing including fonts and other design features. I’ll explain these features in the following section.

Speak to your teaching and learning or ICT staff to find out if your school has an Adobe license. If you do, just head to Firefly.adobe.com and log in with your school email address.

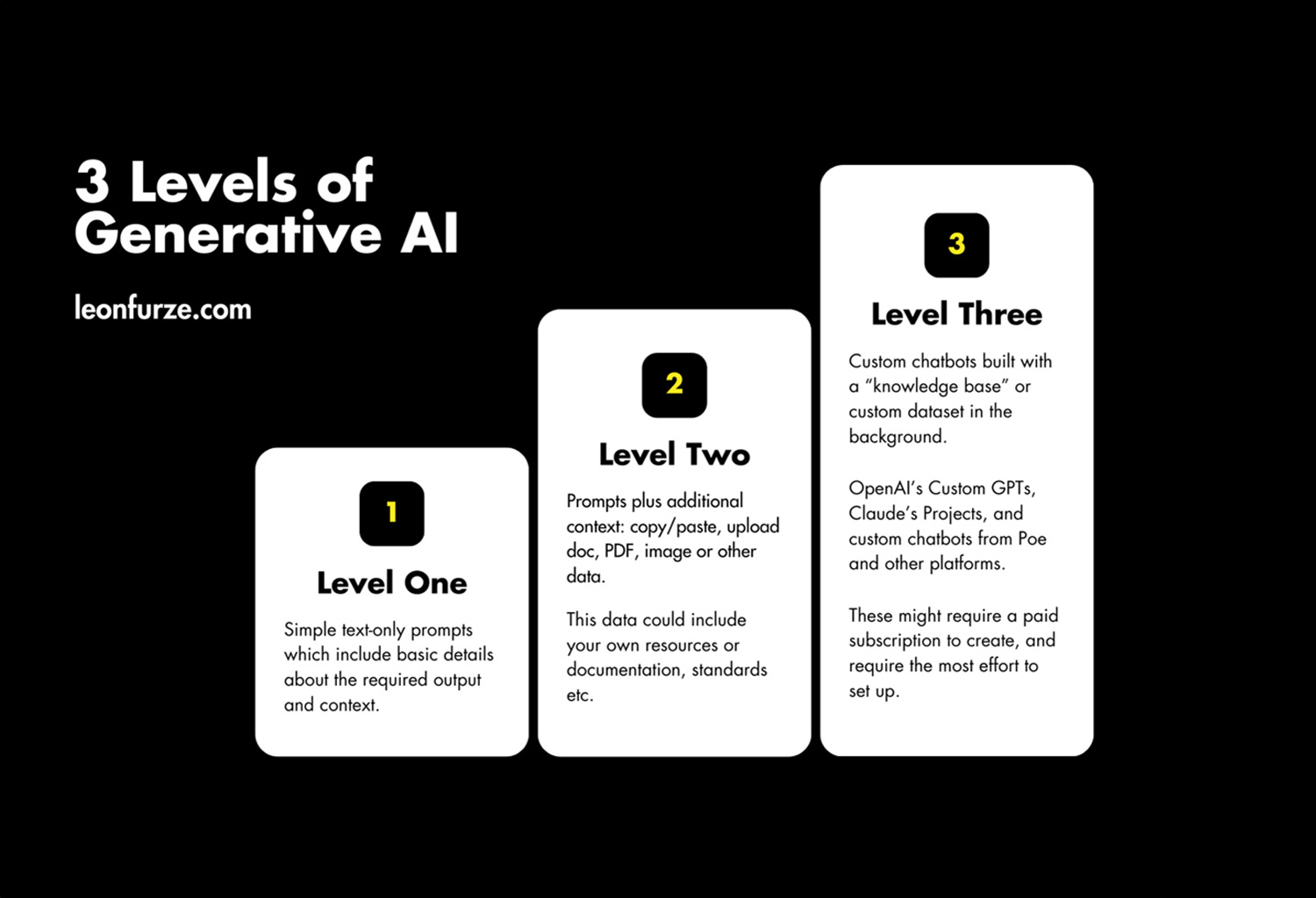

3 levels of prompting

In this section of the newsletter, I explore ways to use GenAI technologies at ‘three levels’: from text-only prompts, to text plus contextual materials, and finally to custom chatbots. Sticking with the theme of image generation, I will explore some Level 1 and Level 2 uses of Adobe Firefly. Although you can’t (yet) create custom Firefly chatbots, you can achieve some great outcomes with Level 1 and Level 2 style prompts.

Level 1: Basic Prompts

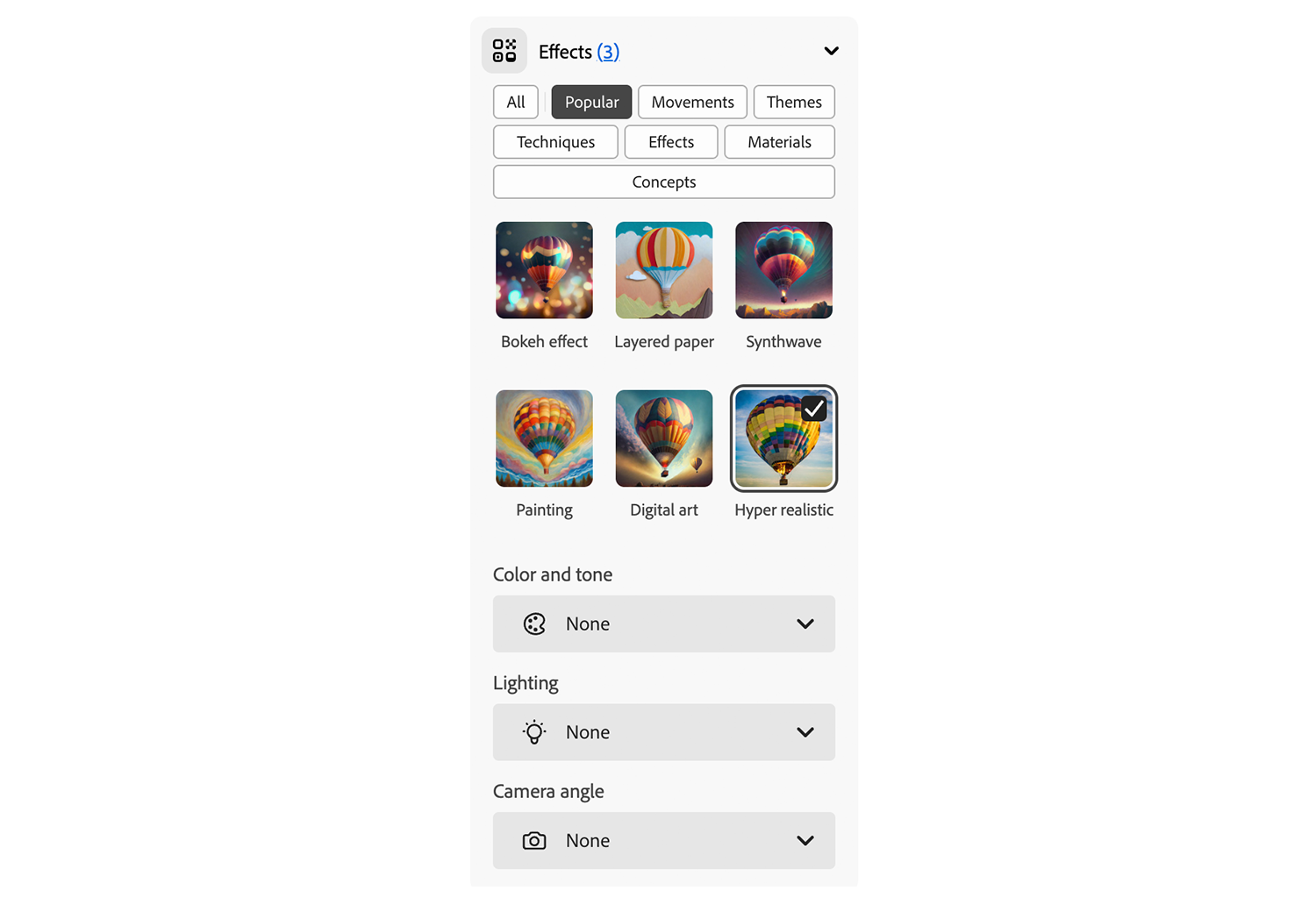

In Firefly, the prompt can be typed directly into the prompt box and used as-is, without refinement. Alternatively, you can combine the prompt with ‘Effects’ such as art styles and different lighting.

For example, you could use a detailed text-only prompt, such as:

‘Beautiful cozy fantasy stone cottage in a spring forest aside a cobblestone path and a babbling brook. Stone wall. Mountains in the distance. Magical tone and feel, hyper realistic.’

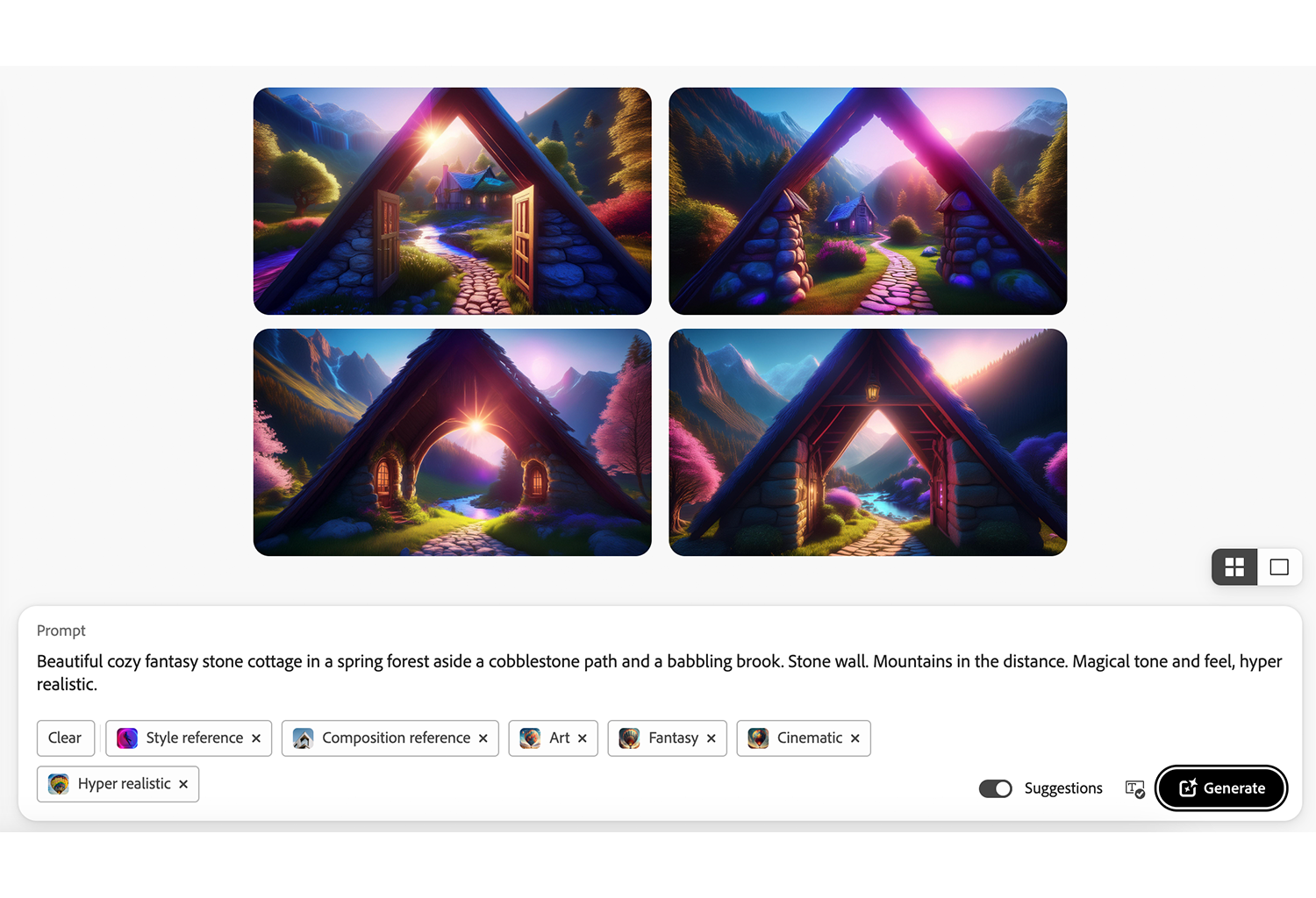

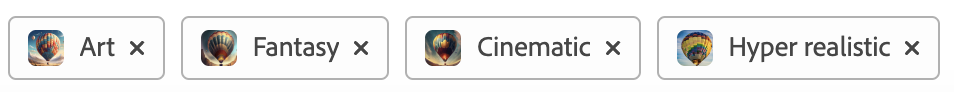

Or, you could combine that detailed text prompt with a series of ‘Effects’ tags to get an even more specific outcome.

Level 2: Style and structure prompts

In text-based chatbots like ChatGPT and Claude, ‘Level 2’ prompting means uploading additional contextual materials such as documents and PDFs. With image generation, it means uploading reference materials for style and composition.

In Adobe Firefly, it is possible to upload reference materials or select from a library. These have two functions:

- Composition references control aspects such as outline, shape, and depth

- Style references control colour and visual style

If we take the prompt above and add a style reference of bright neon colours and a composition reference of an architectural photograph, the image changes dramatically.

Research Roundup: Deepfakes


Recent studies have explored the potential and limitations of deepfake and synthetic media technologies and their implications for education. While deepfakes show promise for certain aspects of educational content creation and accessibility, current research suggests they also pose significant risks in terms of misinformation, academic integrity and student wellbeing. Studies have examined deepfake detection methods, potential benefits and risks, and the ethical implications of this rapidly evolving technology.

Key findings

- Deepfakes are AI-generated media that can realistically depict individuals saying or doing things they never actually said or did (Kietzmann et al., 2020).

- The technology behind deepfakes typically involves deep learning procedures using Generative Adversarial Networks (GANs) or advanced diffusion models – the same technologies which power image generation models (Appel & Prietzel, 2022).

- Human ability to detect deepfakes is limited, with one study finding listeners correctly identified audio deepfakes only 73% of the time (Mai et al., 2023).

- Deepfakes have potential positive applications in education, including personalised learning, accessibility support, and creating engaging educational content (Caporusso, 2021; Westerlund, 2019).

- However, deepfakes also pose risks of misinformation, non-consensual content creation, and erosion of trust in educational institutions (Citron & Chesney, 2019; Harris, 2021).

- There is a serious and growing concern about deepfakes being used to create child sexual abuse material (CSAM) (Hern, 2024).

Implications for education

The findings from these studies have several important implications for education. First, while deepfake technologies may offer potential benefits for creating personalised and accessible learning content, they should not be relied upon without careful consideration of the ethical implications and potential for misuse.

Second, educators need to be aware of and actively address the potential risks of deepfakes, particularly those related to misinformation, non-consensual content creation and cyberbullying. This is especially important in diverse educational settings where some students may be more vulnerable to the negative impacts of deepfakes.

Lastly, there may be pedagogical value in incorporating education about deepfakes into media literacy curricula. For example, teaching students about how deepfakes are created and detected could help them develop critical thinking skills and resilience against misinformation.

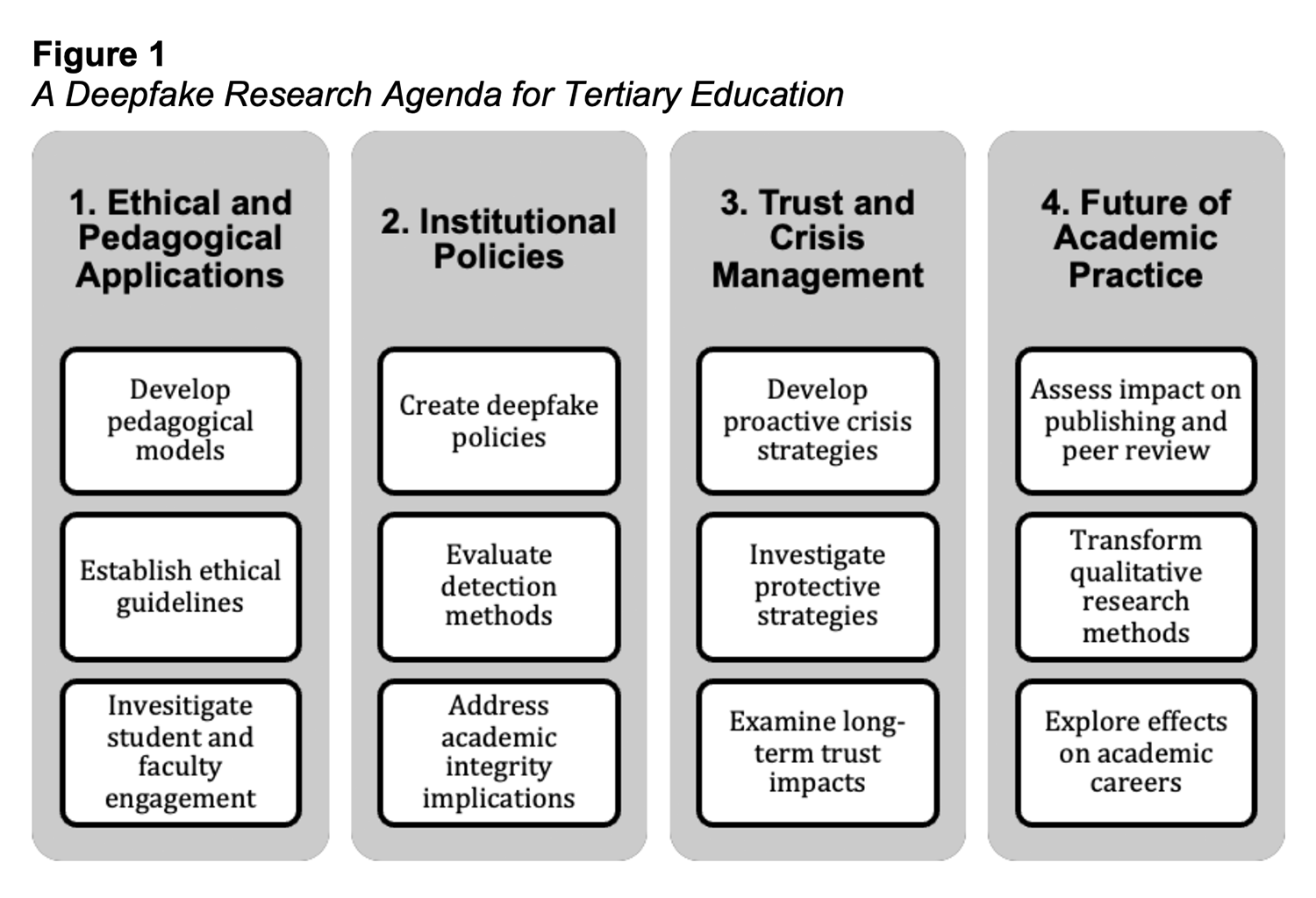

In recognition of the importance of this topic, we have recently proposed a research agenda for deepfakes in education (Roe et al., 2024). This agenda focuses on four key areas: ethical and pedagogical applications of synthetic media in learning, institutional policies for deepfake management, impact on institutional trust and crisis management, and the future of academic practice in light of deepfake technologies.

References

- Appel, M., & Prietzel, F. (2022). The detection of political deepfakes. Journal of Computer-Mediated Communication, 27(4), zmac008.

- Caporusso, N. (2021). Deepfakes for the Good: A Beneficial Application of Contentious Artificial Intelligence Technology. In T. Ahram (Ed.), Advances in Artificial Intelligence, Software and Systems Engineering (Vol. 1213, pp. 235–241). Springer International Publishing.

- Citron, D., & Chesney, R. (2019). Deep Fakes: A Looming Challenge for Privacy, Democracy and National Security. California Law Review, 107(6), 1753.

- Harris, K. R. (2021). Video on demand: What deepfakes do and how they harm. Synthese, 199(5), 13373–13391.

- Hern, A. (2024, March 17). Labour considers ‘nudification’ ban and cross-party pledge on AI deepfakes. The Guardian.

- Jacobsen, B. N., & Simpson, J. (2023). The tensions of deepfakes. Information, Communication & Society, 0(0), 1–15.

- Kietzmann, J., Lee, L. W., McCarthy, I. P., & Kietzmann, T. C. (2020). Deepfakes: Trick or treat? Business Horizons, 63(2), 135–146.

- Mai, K. T., Bray, S., Davies, T., & Griffin, L. D. (2023). Warning: Humans cannot reliably detect speech deepfakes. PLOS ONE, 18(8), e0285333.

- Roe, J., Perkins, M., & Furze, L. (2024). Deepfakes and Higher Education: A Research Agenda and Scoping Review of Synthetic Media. Journal of University Teaching and Learning Practice. Advance online publication. https://doi.org/10.53761/2y2np178

- Westerlund, M. (2019). The Emergence of Deepfake Technology: A Review. Technology Innovation Management Review.

Upcoming professional development

Q&A

Every month we’ll be inviting Independent school teachers and leaders to ask questions, which Leon will (try to) answer in the following issue. To send your questions, requests, or feedback, please contact Leon at leonfurze@gmail.com.

Stay tuned for the next edition in ISV’s Latest in Learning newsletter.