AI Insights for Independent schools

August 2024

ChatGPT-4o, 3 levels of prompting and using AI to assess written outcomes: all this (and more) in AI Insights.


Welcome to the second issue of AI Insights for Independent schools, your monthly guide to the rapidly changing world of Generative AI in K-12 education. This issue picks up where we left off last month and looks at AI tools, research and practical strategies.

In the first issue, I introduced the AI Assessment Scale (AIAS) and explored the capabilities of Anthropic’s Large Language Model, Claude 3.5 Sonnet. Although Claude is the app I use most, this month I’ll be turning my attention to the most popular AI model and the one that kick-started the current AI hype cycle: OpenAI’s ChatGPT.

ChatGPT-4o, the current model for free and paid users, represents a significant leap forward in the technology, with enhanced capabilities across text, voice and vision modalities. For us as educators, this means more powerful tools for classroom instruction and administrative tasks. In this issue, I’ll explore how ChatGPT-4o can be used in educational settings, from language learning to assisting with mathematical tasks and image-based work.

My ‘3 Levels of Prompting’ section this month will focus on creating educational resources using ChatGPT-4o, demonstrating how you can use its advanced capabilities such as file upload (a “Level 2” prompt) to develop engaging and effective learning materials.

In the research roundup, I’ll be examining the latest studies on AI-assisted assessment and grading, a topic of growing importance as AI tools become more prevalent in our educational settings.

As always, the aim is to provide you with practical insights, ethical considerations and actionable strategies to integrate these cutting-edge AI tools into your teaching practices. Whether you’re an AI early-adopter or just beginning to explore its potential in education, I’ve designed this newsletter to keep you informed, inspired and sometimes a little bit cautious.

In this issue

Spotlight: University of Sydney’s ‘Two Lanes’ approach to assessment

In our first issue, I introduced you to the AI Assessment Scale (AIAS), a tool for thoughtfully integrating AI into assessment practices. This month, I’d like to shine a spotlight on another innovative and increasingly popular approach to assessment with AI: the University of Sydney’s ‘Two Lanes’ model.

Image via Adobe Firefly v3. Prompt: Illustration of two motorway highway lanes running through a traditional college library, minimalist, colourful.

The 'Two Lanes' model

The University of Sydney has developed a ‘two-lane’ approach to assessment design that addresses both the challenges and opportunities presented by generative AI:

- Lane 1 – Assessment of learning

These assessments are designed to ensure students have mastered essential skills and knowledge. They are typically supervised and focus on the ‘assessment of learning’.

- Lane 2 – Assessment as learning

These assessments motivate students to learn and teach them to engage responsibly with AI. They focus on productive and responsible participation in an AI-integrated society.

Comparison with the AI Assessment Scale (AIAS)

While the AIAS provides a gradient of AI involvement in assessments (from no AI to full AI), the Two Lanes approach offers a more binary distinction. However, both models share the goal of balancing traditional assessment methods with the integration of AI in education.

The AIAS’s levels 1 and 2 (No AI and AI-Assisted Brainstorming) align closely with Lane 1, while levels 3-5 (AI Editing, AI + Human Evaluation, and Full AI) correspond more to Lane 2 in the Sydney model.

Key principles

The University of Sydney’s approach is underpinned by six assessment principles:

- Promote learning and facilitate reflection

Assessments should not only evaluate outcomes but also actively promote learning. There’s a renewed emphasis on constructive and timely feedback to support this goal. Assessments should encourage students to reflect on their learning and make judgments about their own progress.

- Clear communication

Assessment practices must be clearly communicated to students. This principle now emphasises helping students understand how assessment tasks relate to learning outcomes, creating a clearer connection between what they’re doing and what they’re meant to be learning.

- Inclusivity, validity and fairness

This principle reaffirms a commitment to standards-based assessment while emphasising the need to moderate standards to ensure inclusivity and equity. It recognises the diverse needs of the student population and aims to create a level playing field for all learners.

- Regular review

Assessments should be regularly reviewed and updated to align with new technologies, including AI. This principle also emphasises the importance of including student voices in the evaluation of assessments, ensuring that assessment practices remain relevant and effective.

- Integration into program design

This new principle focuses on integrating assessment and feedback across entire programs, supporting student development from enrolment to graduation. It stresses the need for trustworthy and coherent assessment tasks that validate students’ attainment at various points throughout their educational journey. Additionally, it underscores the importance of equipping students with skills for both their studies and their future careers, including proficiency with appropriate technologies.

- Develop contemporary capabilities

This principle focuses on enabling students to develop and demonstrate skills with relevant technologies while ensuring academic integrity. This includes both unsupervised assessments that motivate learning (‘lane 2’ assessments) and supervised assessments that assure learning (‘lane 1’ assessments).

Implications for Independent schools

While developed for a university context, the Two Lanes approach offers valuable insights for K-12 educators:

- It provides a clear framework for balancing traditional assessment with AI integration.

- It emphasises the importance of preparing students for an AI-integrated future.

- It highlights the need for ongoing professional development in AI and assessment design.

Application of the Month: ChatGPT-4o

OpenAI’s latest release, ChatGPT-4o, represents a significant step forward in AI language model capabilities. While it builds on the foundations of its predecessors, it introduces several new features, including multimodal capabilities, that could have implications for educational settings. OpenAI has also made GPT-4o the default model, meaning that it is available to users with both free and paid accounts.

Here’s a balanced look at what ChatGPT-4o brings to the table:

Key features

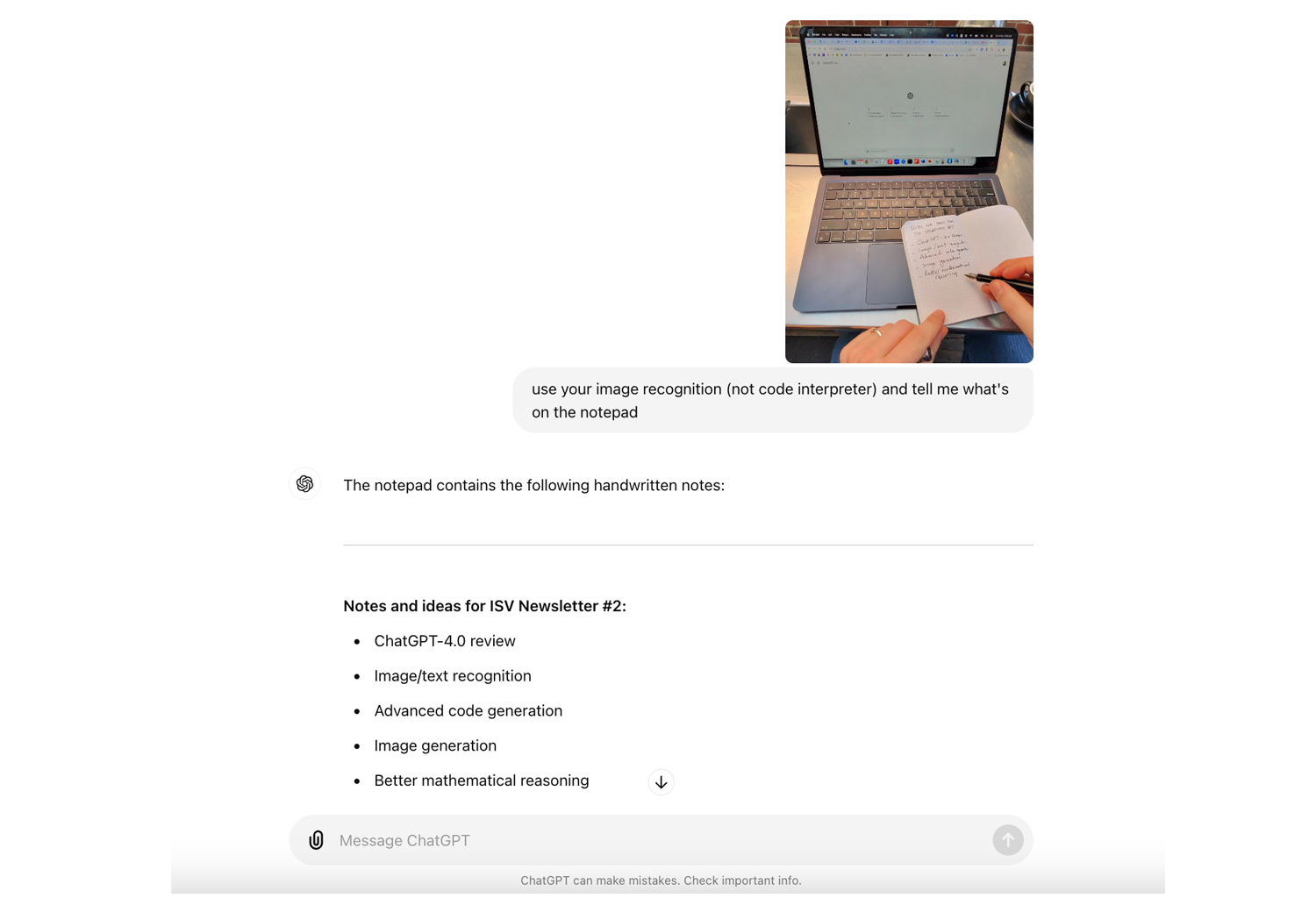

- Multimodal capabilities

ChatGPT-4o can process and generate content across text, audio and image modalities. This could allow for more diverse types of educational content and interactions. Its vision capabilities handle image and text recognition, while DALL-E 3 is used for image generation.

- Document upload

ChatGPT-4o allows users to upload multiple file types into the prompt, including PDFs, Word documents (.docx) and spreadsheets.

- Improved language understanding

The model shows enhanced comprehension across multiple languages, which could be beneficial in multilingual educational environments.

- Internet connection

Using Microsoft’s Bing search, ChatGPT can now connect to the internet, which can make its responses more current and factual. This was formerly only available to paid users.

- Enhanced mathematical reasoning

While still prone to errors, the model demonstrates improved capabilities in areas like mathematical reasoning and coding tasks. It has been ‘fine-tuned’ on additional maths resources. The Code Interpreter tool also allows it to write and run more advanced code.

Using its vision capabilities, ChatGPT can extract the text from handwritten notes like this.

Potential educational applications

- Language learning

The model’s multilingual capabilities could assist in language instruction and translation tasks.

- Multimedia content analysis

Its ability to process images alongside text could be useful for analysing complex educational materials.

- Accessibility support

The audio processing capabilities, including the forthcoming ‘Advanced Voice Mode’, might aid in creating more accessible learning materials for students with diverse needs.

- Programming education

Improved coding capabilities could make it a more reliable tool for teaching and learning programming concepts.

Limitations and considerations

Despite these advancements, it’s crucial to approach ChatGPT-4o with a critical eye:

- Accuracy concerns

Like all AI models, ChatGPT-4o can produce errors or ‘hallucinations’, especially when dealing with specialised or current information. This remains true even when using the internet or uploaded documents.

- Ethical considerations

The use of any AI in education raises important questions about privacy, data security and the appropriate balance between AI assistance and independent learning. Since OpenAI’s free accounts collect data by default, it is always worth reading the terms carefully and understanding the privacy settings.

- OpenAI as a company

OpenAI is a constant topic of speculation and controversy because of its place in the industry and ongoing staffing issues. Just this month, another group of senior leaders has left the company. It is best to remember that all these AI companies are in flux, and their products shouldn’t be built too deeply into school systems.

ChatGPT-4o offers useful possibilities for enhancing educational practices, particularly in areas like language learning, content creation and accessibility. However, its integration into educational settings should be approached thoughtfully, with careful consideration of its limitations and potential impacts on learning outcomes.

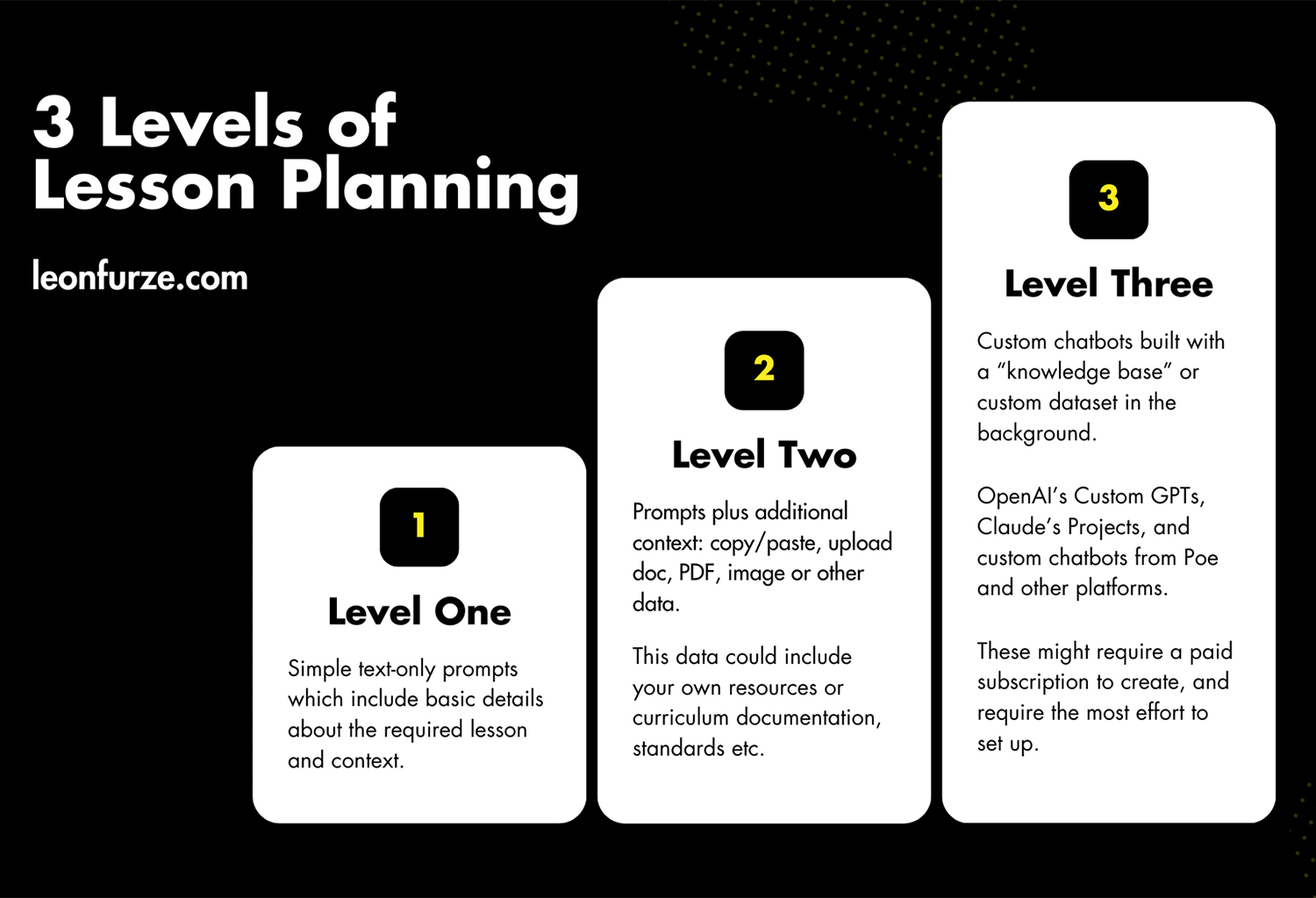

3 levels of prompting

We can use ChatGPT-4o’s capabilities to create various educational resources. Here, I’ll outline three levels of prompting, from basic to advanced, demonstrating how to use this tool effectively for resource creation.

For more information on the ‘3 levels’ approach, make sure you read Issue 1 of this newsletter from July 2024.

Level 1: Basic prompts

At this level, we use straightforward prompts to generate simple educational content. These prompts are direct and require minimal context.

Example: ‘Create a list of 10 key terms related to photosynthesis for a Year 9 Science class. Use browsing to return accurate references.’

ChatGPT-4o can quickly generate a list of terms, providing a starting point for vocabulary lessons or study guides. However, the output at this level may require significant review and modification to suit your specific needs. Adding the phrase ‘use browsing to…’ prompts it to search the web, which can make the output more reliable.

Level 2: Context-based prompts

These prompts provide more context and specific requirements, resulting in more tailored and useful outputs.

Example: ‘Design a 45-minute lesson plan for a Year 11 English Literature class on Shakespeare’s Macbeth. Focus on Act 1, Scene 7. Include learning objectives, a warm-up activity, main discussion points and a closing reflection exercise. Consider the VCE Literature Study Design (attached) for this year level. <upload PDF/doc of the study design>’

With this level of detail, ChatGPT-4o can generate a much more comprehensive and relevant lesson plan. The output will likely require less modification, though you should still review and adjust it to match your teaching style and class needs.

Level 3: Custom chatbots and projects

At this most sophisticated level, educators can leverage advanced AI tools like Custom GPTs in ChatGPT Plus or Claude Projects (both paid products, unfortunately) to create more tailored and complex educational resources.

Example: Using a Custom GPT for Curriculum Design

Imagine you’ve created or accessed a Custom GPT specifically designed for curriculum development in your subject area. Rather than being retrained, this GPT draws on relevant educational standards, best practices in pedagogy and subject-specific content that have been uploaded as additional documents to its “knowledge base”.

Prompt: ‘Using the Victorian Curriculum for Year 10 Science, design a term-long unit on “Earth Systems”. Include weekly topics, key learning objectives, suggested activities and assessment ideas. Consider cross-curricular connections with Geography.’

The Custom GPT, with its additional knowledge, can produce a comprehensive unit plan that’s closely aligned with curriculum standards and incorporates cross-disciplinary elements.

Research roundup: Using AI to assess written outcomes


Recent studies have explored the potential and limitations of using AI to assess student writing. While AI shows promise for certain aspects of writing assessment, particularly in providing quick feedback on early drafts, current research suggests it is not yet a reliable replacement for human evaluation, especially in high-stakes contexts.

Studies have examined AI’s accuracy compared to human raters, potential biases, pedagogical implications and limitations in understanding complex writing issues. This research highlights both the opportunities and challenges of integrating AI into writing assessment practices in education.

Key findings

- AI-generated scores were not statistically significantly different from human scores overall, but AI was less likely to give very high or very low scores and struggled more with accuracy for higher-quality essays (Tate et al., 2024).

- Human raters provided higher-quality feedback than ChatGPT in four out of five critical areas: clarity of directions for improvement, accuracy, prioritisation of essential features, and use of a supportive tone (Steiss et al., 2024).

- Language models can exhibit covert racism in the form of dialect prejudice, particularly against African American English, which affected AI decisions about people’s character, employability, and criminality based solely on dialect features (Hofmann et al., 2024).

- AI often struggles to identify complex issues in writing, tending to give conservative or formulaic feedback (Furze, 2024).

- Creating and evaluating AI feedback bots can be a valuable metacognitive exercise for students, helping them understand writing criteria and feedback styles (Marino & Taylor, 2024).

Implications for secondary education

The findings from these studies and articles have several important implications for secondary education. First, while AI tools may offer potential time-saving benefits for teachers in providing initial feedback on student writing, they should not be relied upon for summative assessment or grading.

The limitations in AI’s ability to accurately evaluate higher-quality writing and complex ideas mean that human expertise remains crucial in assessing student work, particularly for high-stakes assignments or exams. As these models develop, we will no doubt see research which demonstrates the more advanced capabilities of models like GPT-4o and Claude 3.5 Sonnet, but we should remain cautious.

Second, educators need to be aware of and actively address the potential biases in AI writing assessment tools, particularly those related to dialect and language variation. This is especially important in diverse classrooms where students may use non-standard dialects or be English language learners. Teachers should critically evaluate AI feedback and ensure it does not reinforce linguistic biases or unfairly disadvantage certain groups of students.

Finally, there may be pedagogical value in incorporating AI tools into writing instruction in ways that develop students’ critical thinking skills. For example, having students create and evaluate AI feedback bots, or critically analyse AI-generated feedback on their writing, could help them better understand writing criteria and develop metacognitive skills.

Such activities should be carefully designed and supervised to ensure students don’t become overly reliant on AI suggestions and continue to develop their own voice and writing skills.

References

Furze, L. (2024, May 27). Don’t use GenAI to grade student work. Leon Furze. https://leonfurze.com/2024/05/27/dont-use-genai-to-grade-student-work

Hofmann, V., Kalluri, P. R., Jurafsky, D., & King, S. (2024). Dialect prejudice predicts AI decisions about people’s character, employability, and criminality. arXiv. https://doi.org/10.48550/arXiv.2403.00742

Kynard, C. (2023). When robots come home to roost: The differing fates of black language, hyper-standardization, and white robotic school writing (Yes, ChatGPT and his AI cousins). Education, Liberation & Black Radical Traditions for the 21st Century. http://carmenkynard.org/when-robots-come-home-to-roost

Marino, M. C., & Taylor, P. R. (2024). On feedback from bots: Intelligence tests and teaching writing. Journal of Applied Learning & Teaching, 7(2), 1-8. https://doi.org/10.37074/jalt.2024.7.2.22

Popenici, S. (2023). The critique of AI as a foundation for judicious use in higher education. Journal of Applied Learning & Teaching, 6(2), 378-384. https://doi.org/10.37074/jalt.2023.6.2.4

Steiss, J., Tate, T., Graham, S., Cruz, J., Hebert, M., Wang, J., Moon, Y., Tseng, W., Warschauer, M., & Olson, C. B. (2024). Comparing the quality of human and ChatGPT feedback of students’ writing. Learning and Instruction, 91, 101894. https://doi.org/10.1016/j.learninstruc.2024.101894

Tate, T. P., Steiss, J., Bailey, D., Graham, S., Moon, Y., Ritchie, D., Tseng, W., & Warschauer, M. (2024). Can AI provide useful holistic essay scoring? Computers and Education: Artificial Intelligence, 7, 100255. https://doi.org/10.1016/j.caeai.2024.100255

Upcoming professional development

AI Leader Network

Join a network of AI leaders to build connections and learn from and with each other.

Independent Schools Victoria’s Artificial Intelligence (AI) Leader Network is designed to foster collaboration and communication among those with key oversight of AI at their school.

The network serves as a forum for leaders to come together, share insights and work towards enhancing the educational experience for all school communities.

Key takeaways:

- boost your leadership vision

- fresh and effective perspectives and ideas

- connection with fellow AI leaders

- actionable ideas to implement right away.

The first meeting will be held online on Wednesday 11 September, from 3.45 pm to 4.45 pm. ISV Member Schools only.

Q&A

Every month we’ll be inviting Independent school teachers and leaders to ask questions, which Leon will (try to) answer in the following issue. To send your questions, requests, or feedback, please contact Leon at leonfurze@gmail.com.

Stay tuned for the next edition in ISV’s Latest in Learning newsletter.